Samba-1-Instruct-Router

The Samba-1-Instruct-Router is a Composition of Experts (CoE) router. It provides a single-model experience for a subset of the Samba-1 experts that comprise the Enterprise Grade AI (EGAI) benchmark. The EGAI benchmark is a comprehensive collection of widely adopted benchmarks sourced from the open source community. Each benchmark is carefully selected to measure specific aspects of a model’s capability to perform tasks pertinent to enterprise applications and use cases. See Benchmarking Samba-1 for more details.

Tasks

The Samba-1-Instruct-Router can be used for a variety of instruct-based tasks accessed from a single endpoint. It excels at general tasks that instruct models perform, including: generating text across different styles and formats, retrieving detailed information, decision-making support, automating repetitive tasks, writing and content creation, analyzing data, assisting in educational settings, and aiding in programming.

The list below describes some of the tasks for which you can use the Samba-1-Instruct-Router. You can also view Benchmarking Samba-1 for more information on supported tasks and benchmark performance.

- Tables and databases
  - SQL query generation
  - Table question answering
- General programming tasks
- Math
- Tool selection and API usage
  - Tool selection and API usage
  - API function calling
- Parameter identification
- Writing and brainstorming
- Guardrails via critiques of LLM responses for the following tasks
  - Summarization
  - Examination
  - Coding
- Medicine, law, and finance domain tasks

Router attributes

The table below describes the experts and components available in the Samba-1-Instruct-Router.

| Version | Experts | Component | Mode |
|---|---|---|---|
| Samba-1-Instruct-Router-V1 | | KNN-Classifier | Inference |

Inference inputs

Inference inputs for the Samba-1-Instruct-Router are described below. See the Online generative inference section of the API document for request format details.

- Number of inputs: 1
- Input: Prompt or query
- Input type: Text
- Input format: String

["What are some common stocks?"]

Inference outputs

Inference outputs for the Samba-1-Instruct-Router are described below. See the Online generative inference section of the API document for request format details.

- Number of outputs: 1
- Output: Model response
- Output type: Text
- Output format: Tokens
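Because the output is a list of tokens rather than a single string, a client usually has to join the tokens before displaying the response. The following is a minimal sketch, not the platform's own post-processing. It assumes each token carries its own leading whitespace, so the illustrative list adds spaces for that reason and is not actual endpoint output. `join_tokens` is a hypothetical helper name.

```python
def join_tokens(tokens):
    """Concatenate response tokens and trim surrounding whitespace."""
    return "".join(tokens).strip()


# Illustrative tokens only (assumption: tokens keep their leading spaces).
tokens = [
    "\n", "\n", "Answer", ":", " The", " capital", " of", " Austria",
    " is", " Vienna", " (", "G", "erman", ":", " Wien", ").",
]

text = join_tokens(tokens)
print(text)
```

If the endpoint returns tokens with whitespace stripped, plain concatenation runs the words together, and the joining logic would need to follow the tokenizer's own rules instead.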

[
  "\n", "\n", "Answer", ":", "The", "capital", "of", "Austria", "is", "Vienna", "(", "G", "erman", ":", "Wien", ")."
]

Inference hyperparameters and settings

The table below describes the inference hyperparameters and settings for the Samba-1-Instruct-Router. See the Online generative inference section of the API document for basic parameter details.

| Attribute | Type | Description | Allowed values |
|---|---|---|---|
| | Boolean | Allows the user to specify the complete prompt construction. If this value is unset, prompt construction elements such as the start and stop tags, bot and assistant tags, and default system prompt are constructed automatically. | true, false |
| | Boolean | If set to true, the endpoint will not run completion and simply returns the number. | true, false |
| | String | Manually select which expert model to invoke. | |
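As a rough illustration of how one text prompt and the settings above might be combined into a request body, here is a minimal sketch. Every name in it is an assumption: the attribute names are not shown in the table, so `process_prompt`, `token_count_only`, and `select_expert` are placeholder keys, and the `inputs` and `params` fields are hypothetical. Consult the Online generative inference section of the API document for the actual request format.

```python
import json

# Placeholder parameter names (assumptions, not the platform's identifiers):
# two Boolean settings and one String setting, mirroring the table's types.
BOOLEAN_PARAMS = {"process_prompt", "token_count_only"}
STRING_PARAMS = {"select_expert"}


def build_payload(prompt, params=None):
    """Build a JSON body with exactly one text prompt plus checked settings."""
    if not isinstance(prompt, str) or not prompt.strip():
        raise ValueError("the router takes exactly one non-empty string prompt")
    checked = {}
    for name, value in (params or {}).items():
        if name in BOOLEAN_PARAMS:
            if not isinstance(value, bool):
                raise TypeError(f"{name} must be true or false")
        elif name in STRING_PARAMS:
            if not isinstance(value, str):
                raise TypeError(f"{name} must be a string")
        else:
            raise KeyError(f"unknown parameter: {name}")
        checked[name] = value
    # The prompt is wrapped in a one-element list, matching the sample input.
    # The "inputs" and "params" field names are hypothetical.
    return json.dumps({"inputs": [prompt], "params": checked})
```

For example, `build_payload("What are some common stocks?", {"process_prompt": True})` returns a JSON string with a single-element `inputs` list and the one checked setting.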

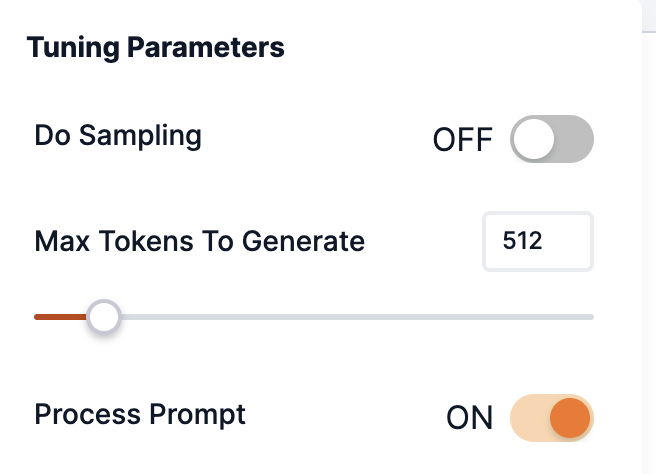

Samba-1-Instruct-Router in the Playground

The Playground provides an in-platform experience for generating predictions using the Samba-1-Instruct-Router.

When selecting an endpoint deployed from Samba-1-Instruct-Router-V1, ensure that Process prompt is turned On in the Tuning Parameters.

- Select a CoE expert or router describes how to choose the Samba-1-Instruct-Router to use with your prompt in the Playground.
- To get optimal responses from the Samba-1-Instruct-Router in the Playground, use the corresponding task-specific prompt template to format your inputs.