Create and use CoE models

A Composition of Experts (CoE) is a system of multiple expert models, offered through a single endpoint, that orchestrates domain-specific experts across various fields. After creating and saving your CoE model, you can adjust its model share settings to share it with other users and tenants.

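This document describes how to:

- Create your own CoE model
- View your CoE model card
- Delete your CoE model
- Create an endpoint using your CoE model
- Interact with your deployed CoE endpoint
- Use your CoE endpoint in the Playground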

Create your own CoE model

SambaStudio allows you to create your own Composition of Experts (CoE) model. By selecting experts and adding them to your composition, you can tailor the new CoE model to your specific tasks.


The following considerations apply when creating a new CoE model:


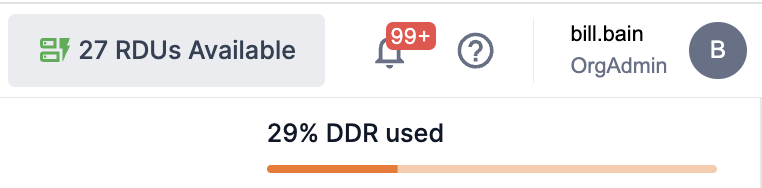

Follow the steps below to create a new CoE model. As you select and add experts to your composition, the % DDR used indicator shows the amount of Double Data Rate (DDR) memory used by the selected experts. See DDR usage information for details.

-

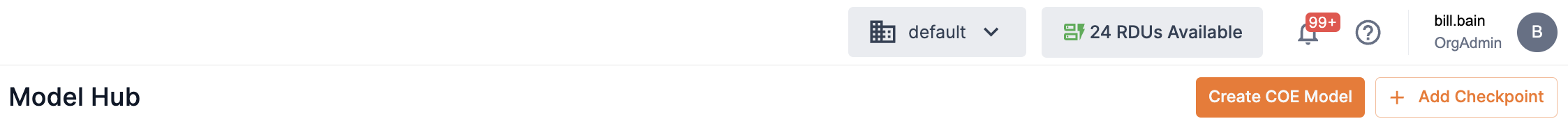

From the Model Hub, click Create CoE Model.

Figure 2. Create CoE model

Figure 2. Create CoE model -

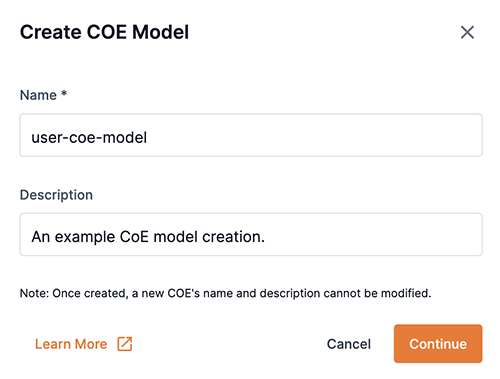

In the Create CoE Model box, enter a name for your CoE model into the Name field. Click Continue to proceed.

Figure 3. Create CoE model box

Figure 3. Create CoE model box -

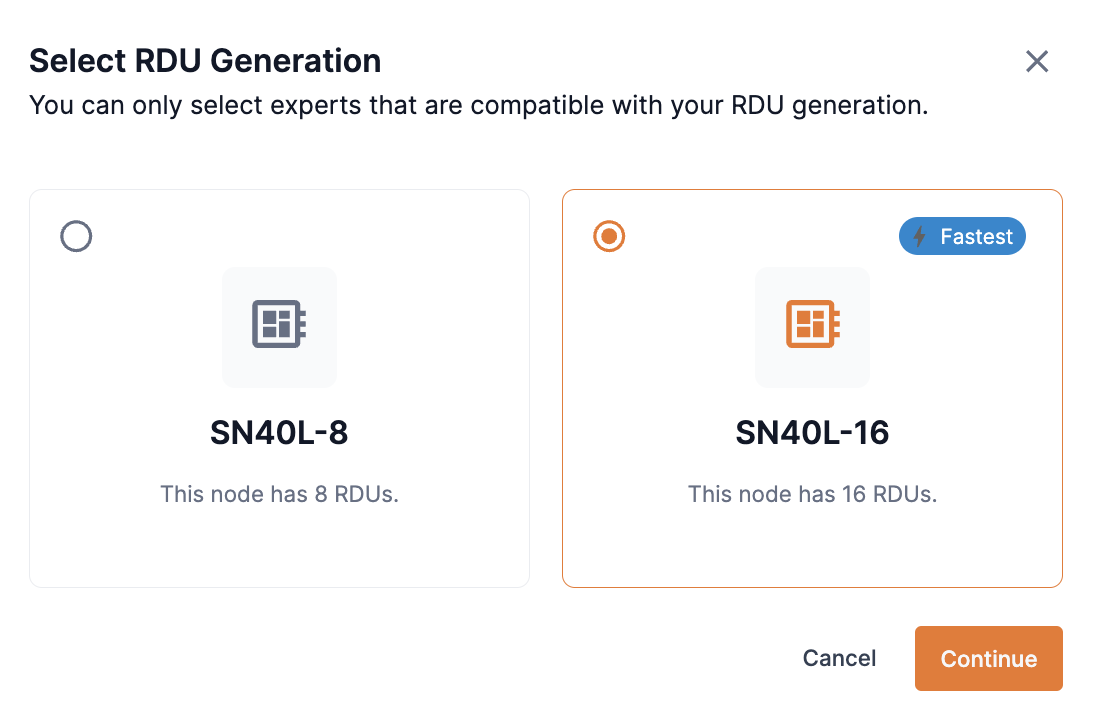

If your SambaStudio environment is configured with SN40L-8 and SN40L-16, the Select RDU Generation box will open.

-

Select one of the options and click Continue to proceed.

-

SN40L-16 will provide faster CoE creation times.

-

You will only be able to add expert models to your Composition of Experts that are compatible with your selection of SN40L-8 or SN40L-16.

-

You can view the currently supported SN40L-16 supported models.

Figure 4. Select RDU Generation box

Figure 4. Select RDU Generation box -

-

-

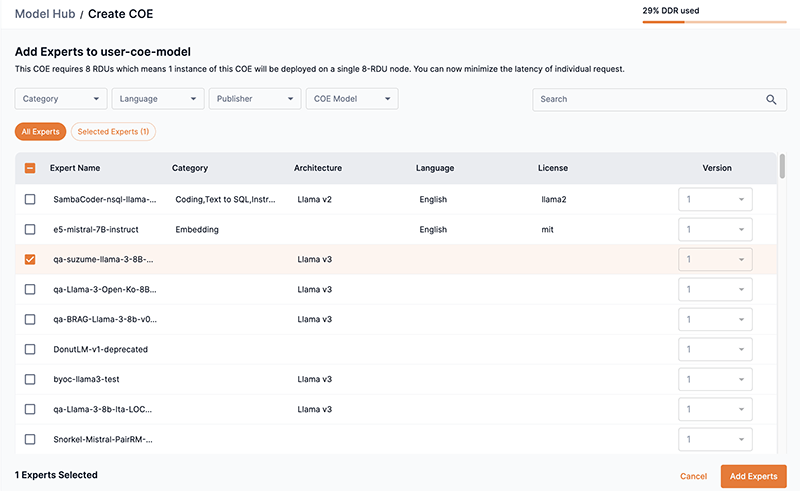

The Add Experts window will open. The Add Experts window allows you to select and add expert models to your Composition of Experts model.

-

Use the drop-down filters, Search box, and Expert buttons to refine the list of expert models displayed.

-

-

Select the box next to the expert(s) you wish to add to your CoE model.

-

As you select and add experts to your CoE model, the % DDR used indicator bar (in the upper right corner as shown in Figure 5) displays the amount of Double Data Rate (DDR) memory used by the selected experts during configuration. If the total number of your selected experts exceeds 100% DDR used, it indicates that the selected experts require more memory than available and you will not be able to proceed. You will need to change your combination of selected experts in order to proceed. See DDR usage information for more information.

-

From the Version column drop-down, you can choose one of the available versions of the expert model (if more than one version is available). The latest version of the model is selected by default.

-

-

Click Add Experts to proceed.

Figure 5. Add Experts window

Figure 5. Add Experts window -

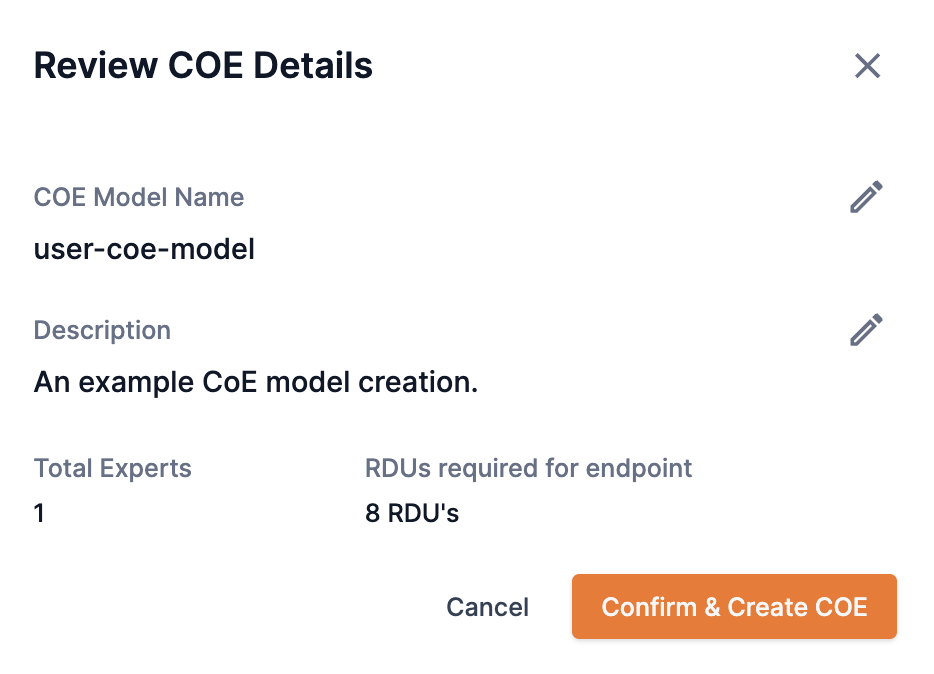

The Review CoE details box will open. Here you can review and make edits to your CoE Model name or Description.

-

Click the edit icon to edit the CoE Model name or Description.

-

Click Confirm & create CoE to create your new CoE model.

Once you confirm the creation of your CoE model, the name and description cannot be edited.

Figure 6. Review CoE details box

Figure 6. Review CoE details box

-
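The % DDR used rule from the Add Experts step can be illustrated with a small bash sketch. It is a hypothetical helper, not part of SambaStudio: it assumes each selected expert contributes a fixed percentage and that the indicator shows their simple sum, and the percentages in the example are made up. SambaStudio computes the real values for you in the Add Experts window.

```bash
#!/usr/bin/env bash
# Hypothetical helper illustrating the "% DDR used" rule from the Add Experts
# window. ASSUMPTION: each selected expert contributes a fixed percentage and
# the indicator shows their simple sum. Real values come from SambaStudio.

# ddr_fits PCT...: print the summed percentage, then return 0 if the total is
# at most 100, or 1 if the selection would exceed the available DDR memory.
ddr_fits() {
  awk 'BEGIN {
    total = 0
    for (i = 1; i < ARGC; i++) total += ARGV[i]
    printf "total=%.1f%%\n", total
    if (total > 100) exit 1
    exit 0
  }' "$@"
}

# Example with made-up percentages for three selected experts.
if ddr_fits 35 40 20; then
  echo "Selection fits; you can click Add Experts."
else
  echo "Selection exceeds 100% DDR used; change the selected experts."
fi
```

Because the helper only compares a sum against 100, it mirrors the pass/fail behavior of the indicator; it does not model how SambaStudio derives each expert's share.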

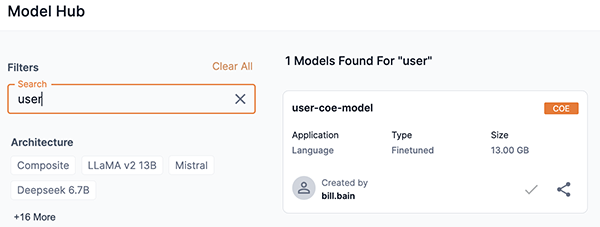

View your CoE model card

After you create your CoE model, you can view its model card from the Model Hub by clicking your CoE model preview.

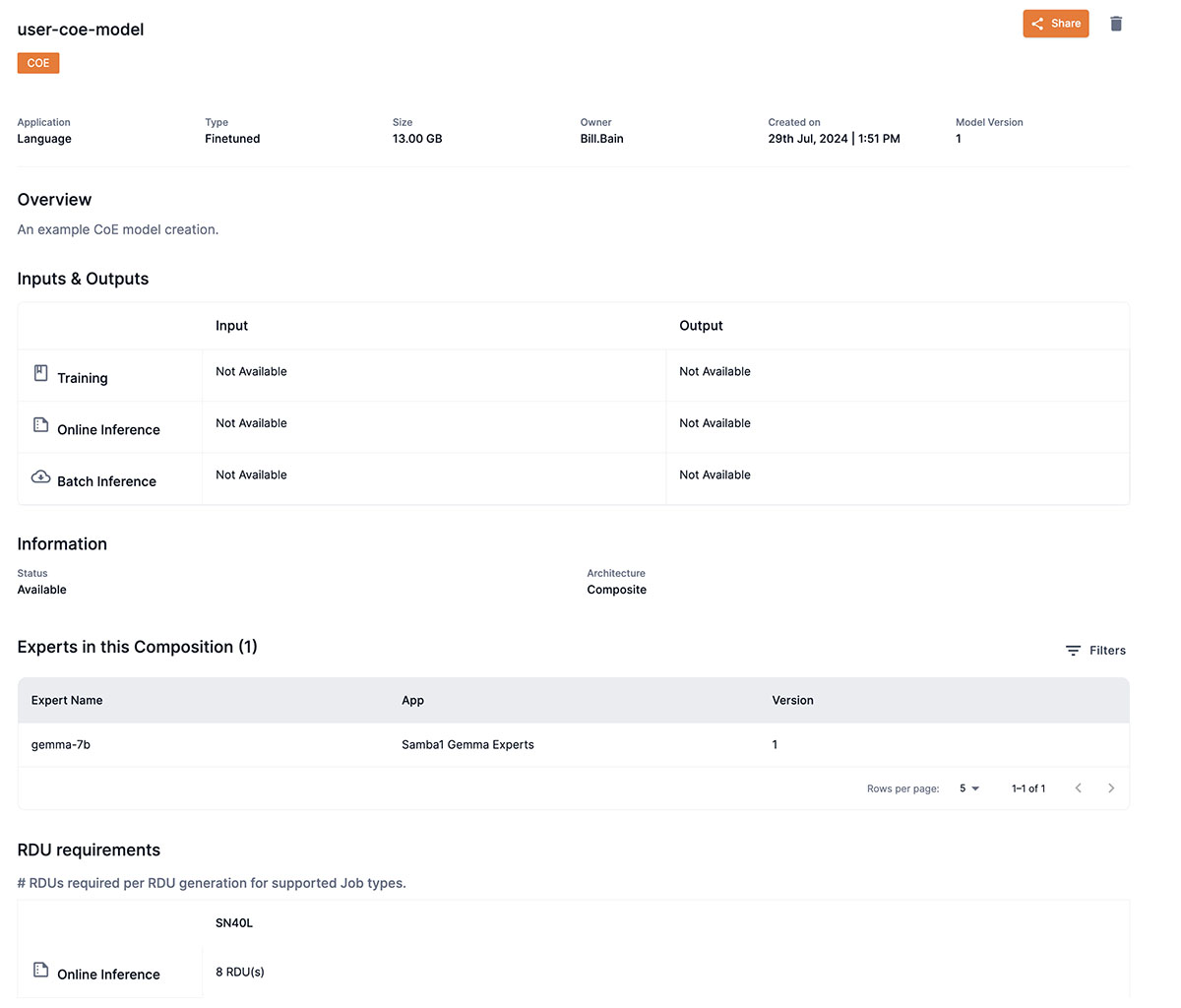

Your CoE model card opens, providing detailed information about the model, including:

- Application denotes the model's application type (Language).
- Type displays your CoE model type.
- Owner denotes the CoE model owner.
- Overview provides the model description you entered.
- Experts in this Composition lists the expert models used to create your CoE model.
  - You can adjust the number of rows displayed and page through the list.
  - Hover over each expert to view its description.
  - Model version displays the version of each expert model that was selected when the CoE was created.
- The supported SambaNova hardware generation (SN40L) for each job type.
- The minimum number of RDUs required for each job type.

Delete your CoE model

CoE models can be deleted by the model creator, organization administrators (OrgAdmin), and tenant administrators (TenantAdmin).

Follow the steps below to delete your CoE model.

- Select your CoE model card from the Model Hub.
- Click the trash icon to the right of your CoE model name.
  - You cannot delete a CoE model that has associated endpoints. A warning appears identifying the associated endpoints, which must be deleted before the CoE model can be deleted.
  - You can click the blue endpoint name in the list to open its Endpoint window and view detailed information about it.
  - Click X in the upper-right corner to close the warning.
- If your CoE model has no associated endpoints, click Yes in the Delete model box to permanently remove your CoE model from the Model Hub. The model will no longer be available for use.

Create an endpoint using your CoE model

Once you have created your CoE model, you can use it to create and deploy a CoE endpoint for predictions and Playground use.

See Create an endpoint to learn how to create and deploy your CoE endpoint.

Interact with your deployed CoE endpoint

Once your CoE endpoint has been deployed, you can interact with it as demonstrated in the example curl request below.

```bash
curl -X POST \
  -H 'Content-Type: application/json' \
  -H 'key: <your-endpoint-key>' \
  --data '{"inputs":["{\"conversation_id\":\"conversation-id\",\"messages\":[{\"message_id\":0,\"role\":\"user\",\"content\":\"\"}]}"],"params":{"do_sample":{"type":"bool","value":"true"},"max_tokens_to_generate":{"type":"int","value":"1024"},"process_prompt":{"type":"bool","value":"true"},"repetition_penalty":{"type":"float","value":"1"},"select_expert":{"type":"str","value":"llama-2-7b-chat-hf"},"stop_sequences":{"type":"str","value":""},"temperature":{"type":"float","value":"0.7"},"top_k":{"type":"int","value":"50"},"top_p":{"type":"float","value":"0.95"}}}' \
  '<your-sambastudio-domain>/api/predict/nlp/<project-key>/<endpoint>'
```

The example above calls the llama-2-7b-chat-hf expert through the property "select_expert":{"type":"str","value":"llama-2-7b-chat-hf"}. Put your prompt in the empty content value of the user message.
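To script requests instead of hand-editing the escaped --data string, the body can be assembled in bash. The sketch below relies only on the request layout shown in the curl example above; the helper names json_escape, build_coe_payload, and coe_predict are hypothetical, and the default parameter values are copied from that example rather than recommended settings. The detail it makes explicit is the double encoding: the conversation is serialized to JSON first and then embedded as a JSON string inside inputs, while every entry in params carries an explicit type and a string value.

```bash
#!/usr/bin/env bash
# Sketch: build the JSON body for a CoE predict request in plain bash.
# The field layout mirrors the curl example; the function names are
# hypothetical helpers, not part of SambaStudio.

# json_escape STRING: escape backslashes, double quotes, newlines, carriage
# returns, and tabs so STRING can sit inside a JSON string literal.
# Other control characters are not handled.
json_escape() {
  local s=$1 out='' c i
  for (( i = 0; i < ${#s}; i++ )); do
    c=${s:i:1}
    case $c in
      '\') out+='\\' ;;
      '"') out+='\"' ;;
      $'\n') out+='\n' ;;
      $'\r') out+='\r' ;;
      $'\t') out+='\t' ;;
      *) out+=$c ;;
    esac
  done
  printf '%s' "$out"
}

# build_coe_payload EXPERT PROMPT [MAX_TOKENS]: print the request body.
build_coe_payload() {
  local expert=$1 prompt=$2 max_tokens=${3:-1024} inner params
  # The conversation is itself JSON ("conversation-id" is the example's
  # placeholder); it is sent as a JSON-encoded string inside "inputs".
  inner=$(printf '{"conversation_id":"conversation-id","messages":[{"message_id":0,"role":"user","content":"%s"}]}' \
    "$(json_escape "$prompt")")
  # Each parameter is an object with a "type" and a string "value";
  # printf reuses its format for every name/type/value triple.
  params=$(printf '"%s":{"type":"%s","value":"%s"},' \
    do_sample bool true \
    max_tokens_to_generate int "$max_tokens" \
    process_prompt bool true \
    repetition_penalty float 1 \
    select_expert str "$(json_escape "$expert")" \
    stop_sequences str '' \
    temperature float 0.7 \
    top_k int 50 \
    top_p float 0.95)
  # Escape the conversation a second time and drop the trailing comma.
  printf '{"inputs":["%s"],"params":{%s}}\n' "$(json_escape "$inner")" "${params%,}"
}

# coe_predict URL KEY BODY: POST BODY to a deployed CoE endpoint.
coe_predict() {
  curl -sS -X POST \
    -H 'Content-Type: application/json' \
    -H "key: $2" \
    --data "$3" \
    "$1"
}

# Print the same body that the curl example sends (empty prompt).
build_coe_payload llama-2-7b-chat-hf ''
```

To send a request, pass your endpoint URL, endpoint key, and a generated body, for example `coe_predict "$ENDPOINT_URL" "$ENDPOINT_KEY" "$(build_coe_payload llama-2-7b-chat-hf 'Summarize this paragraph.')"`, where ENDPOINT_URL and ENDPOINT_KEY are placeholders for your own values. Escaping in a plain loop keeps the sketch dependency-free; a dedicated JSON tool such as jq is a sturdier choice where it is available.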

Use your CoE endpoint in the Playground

The Playground provides an in-platform experience for generating predictions using your deployed CoE endpoint.

- See Use the Playground editor to learn how to use the editor with your Samba-1 endpoint.
- See Select a CoE expert or router to learn how to choose a specialized CoE expert to use with your prompt in the Playground.
- To get optimal responses from a task-specific CoE expert in the Playground, format your inputs with that expert's corresponding template.