Models on RDU hardware: The basics

Few SambaNova customers create a model from scratch, though we offer some simple sample code to illustrate how to do that. Most customers want either to run an existing PyTorch model on RDU or to use a Hugging Face model on RDU:

- Run PyTorch models on RDU. If you have a PyTorch model and want to run it on RDU, most of your code just works, but you have to make a few changes. We show revised code and discuss the main steps in Convert a simple model to SambaFlow. That tutorial includes a pointer to existing code, a solution that uses a SambaNova loss function, and a solution that uses an external loss function.

- Use pretrained models on RDU. You can compile a pretrained model on RDU and use it with your own data. We’re working on an example for that workflow.

Some aspects of running your model on RDU are different from running in a more traditional CPU or GPU environment. This doc page gives some background and points to more information.

This doc set includes a tutorial that uses a Hugging Face GPT-2 model. The tutorial consists of code in our public GitHub repo and the following docs:

- Compile, train, and perform inference with a Hugging Face GPT model. Learn about data preparation, model download, and how to do compilation, training, and inference.

- Code elements of the training program. Examine the code for training (compile and run).

- Code elements of the inference program. Examine the code for inference on new data (compile and run).

You might find some of our background materials interesting, for example the white paper Accelerated computing with a Reconfigurable Dataflow Architecture.

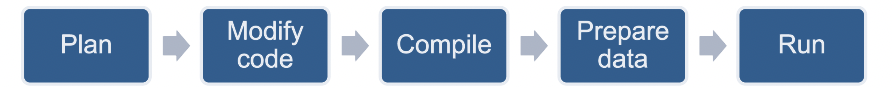

SambaNova workflow

When you want to run a model on SambaNova hardware, the typical workflow is the following:

- Start with one of our tutorials on GitHub, or examine Convert a simple model to SambaFlow to understand model conversion.

- Modify your code. Convert a simple model to SambaFlow has two examples:

  - Functions and changes uses an included loss function.

  - Model with external loss function shows the same converted model, but with an external loss function.

- Compile your model. The output of the compilation is a PEF file, a binary file that contains the full details of the model and can be deployed onto an RDU. See Compile and run your first model.

- Prepare the data you want to feed to your compiled model. The Intermediate model tutorial has an example. See also the data preparation scripts in our public GitHub repository.

- Run the model, passing in the PEF file.

- Compile the model for inference. This step isn’t necessary for small example models, but it is essential for large models. Compiling for inference takes less time and fewer resources than compiling for training.

- Run inference. What inference means depends on your model. In our examples, the lenet model predicts labels for the test dataset of the Fashion MNIST database, and the transformer model performs sentiment analysis (after being trained on a movie review dataset).
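
The compile and run steps above follow a two-subcommand pattern: one invocation produces a PEF file, and a later invocation consumes it. The sketch below illustrates only that pattern with Python's standard `argparse` module; it does not call SambaFlow. The script name `model_app`, the flags `--pef-name`, `--pef`, and `--inference`, and the `describe` helper are assumptions made for illustration, so check the tutorials for the options your SambaFlow release actually accepts.

```python
import argparse
from typing import List


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with hypothetical `compile` and `run` subcommands."""
    parser = argparse.ArgumentParser(prog="model_app")  # hypothetical script name
    sub = parser.add_subparsers(dest="command", required=True)

    # `compile` produces a PEF file; the flag names are illustrative assumptions.
    compile_cmd = sub.add_parser("compile", help="produce a PEF file")
    compile_cmd.add_argument("--pef-name", required=True)
    compile_cmd.add_argument("--inference", action="store_true")

    # `run` consumes a PEF file, so the path to it is mandatory.
    run_cmd = sub.add_parser("run", help="train or infer with a compiled PEF")
    run_cmd.add_argument("--pef", required=True)
    run_cmd.add_argument("--inference", action="store_true")
    return parser


def describe(argv: List[str]) -> str:
    """Return a one-line description of the workflow step that argv selects."""
    args = build_parser().parse_args(argv)
    mode = "inference" if args.inference else "training"
    if args.command == "compile":
        return f"compile for {mode} -> {args.pef_name}.pef"
    return f"run {mode} using {args.pef}"


if __name__ == "__main__":
    # The four stages in workflow order: compile, run, compile for inference, infer.
    workflow = [
        ["compile", "--pef-name", "lenet"],
        ["run", "--pef", "out/lenet.pef"],
        ["compile", "--inference", "--pef-name", "lenet_inf"],
        ["run", "--inference", "--pef", "out/lenet_inf.pef"],
    ]
    for step in workflow:
        print(describe(step))
```

Making the PEF path a required argument of `run` mirrors the dependency in the workflow: a model cannot run until a compilation has produced a PEF file for it.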

PyTorch models and SambaFlow models

If you already have a PyTorch model, the changes you have to make to run that model on RDU are fairly minimal. Here are some resources:

- Convert a simple model to SambaFlow: detailed comparison of a PyTorch model and a corresponding SambaFlow model.

- API Reference: SambaFlow API reference.

- SambaNova PyTorch operator support: table of supported PyTorch operators. This table is updated with each release and includes fully supported and experimental (not fully tested) operators.
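
One way to use the operator support table is to list the `nn` layers that your model source refers to and then look each one up by hand. The following is a minimal sketch that uses only the Python standard library. The `find_nn_names` helper, the sample source, and the `CHECKED_AGAINST_TABLE` set are hypothetical; the set is left empty on purpose and says nothing about which operators are actually supported.

```python
import ast
from typing import Set


def find_nn_names(source: str, alias: str = "nn") -> Set[str]:
    """Collect every name accessed as `<alias>.<Name>` in Python source text."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Name)
            and node.value.id == alias
        ):
            names.add(node.attr)
    return names


# Hypothetical model source; the text is only parsed, so torch is not required.
SAMPLE_SOURCE = """
import torch.nn as nn

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(784, 10)
        self.act = nn.ReLU()
"""

# Placeholder: add names here once you have confirmed them in the table
# for your release. Intentionally empty in this sketch.
CHECKED_AGAINST_TABLE: Set[str] = set()

if __name__ == "__main__":
    used = find_nn_names(SAMPLE_SOURCE) - {"Module"}  # Module is the base class
    for name in sorted(used - CHECKED_AGAINST_TABLE):
        print(f"look up in the support table: nn.{name}")
```

The scan only catches the `nn.<Name>` spelling. Fully qualified references such as `torch.nn.Linear` and calls into `torch.nn.functional` need a different `alias` or a manual check.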