Get Started with SambaTune

SambaTune is a tool for profiling, debugging, and tuning the performance of applications running on SambaNova DataScale® hardware. The tool helps you find bottlenecks and improve performance.

SambaTune automates:

- Collection of hardware performance counters
- Metrics aggregation
- Report generation and visualization
- Benchmarking of the application to compute average throughput over several runs
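The benchmarking step reports an average throughput over several runs. The Python sketch below shows one way such an average can be computed. The `RunResult` record, the sample counts, and the durations are hypothetical illustrations. This is not SambaTune's implementation or API.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class RunResult:
    """Timing for one benchmark run (hypothetical record, not a SambaTune type)."""
    samples: int    # number of samples processed during the run
    seconds: float  # wall-clock duration of the run


def throughput(run: RunResult) -> float:
    """Return samples per second for a single run."""
    if run.seconds <= 0:
        raise ValueError("run duration must be positive")
    return run.samples / run.seconds


def average_throughput(runs: list[RunResult]) -> float:
    """Return the mean of the per-run throughputs across several runs."""
    if not runs:
        raise ValueError("need at least one run")
    return mean(throughput(r) for r in runs)


if __name__ == "__main__":
    # Three hypothetical runs over the same workload with different durations.
    runs = [RunResult(12800, 10.0), RunResult(12800, 8.0), RunResult(12800, 12.8)]
    print(f"{average_throughput(runs):.1f} samples/s")  # prints "1293.3 samples/s"
```

This sketch averages the per-run throughputs (1280, 1600, and 1000 samples/s). Dividing total samples by total time gives a different result (about 1246.8 samples/s), because longer runs carry more weight. The text above does not say which definition SambaTune uses.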

SambaTune in the SambaNova workflow

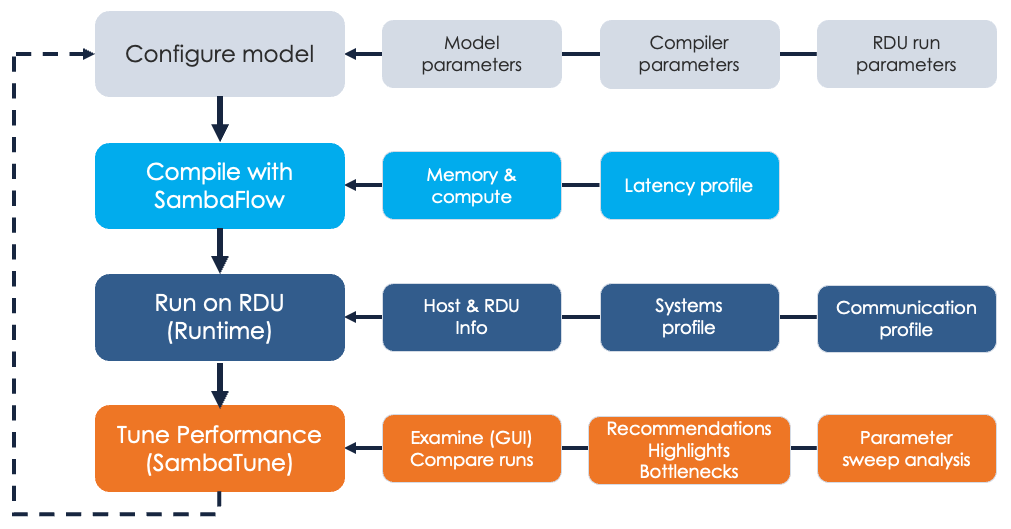

Here’s an overview of how SambaTune fits into the SambaNova workflow:

At the top level, developing and optimizing a model is an iterative process that includes four steps:

- First, you download or create the model.
  - You can start with an existing PyTorch model and make a few code changes, or start from scratch.
  - As part of this first step, you consider which model parameters, compiler parameters, and run (train) parameters to use, and you might do test runs.
- Next, you compile the model. During compilation, the compiler decides how to use the memory and compute units. The output of compilation is a PEF file, which you then use to run the model.
- After compilation, you run the model in training mode, passing in the PEF file and your training data.
- You can now do a SambaTune run of the model to see model characteristics.
  - Look at a single run or compare model runs.
  - Find out whether bottlenecks are on the host or the RDU.
  - At the top of each GUI tab, look at Diagnoses for likely areas of improvement.
  - Optionally, use Hypertuner (Beta) for a parameter sweep analysis.

Users report that SambaTune has helped them analyze performance bottlenecks, tune their applications, and succeed with SambaNova.

To learn about a typical SambaTune workflow, see Workflow overview.