Samba-1-Chat-Router

The Samba-1-Chat-Router is a composition of 7B-parameter models that provides a lightweight, benchmark-winning, single-model experience. The Samba-1-Chat-Router is derived from Samba-CoE-v0.3, a scaled-down version of Samba-1 that leverages the Composition of Experts (CoE) architecture. Samba-CoE-v0.3 demonstrates the strength of CoE and of the routing techniques that tie the experts together into a single model.
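To make the "single model experience" concrete, the Python sketch below shows the general shape of a composition of experts: callers see one `generate()` method, while a router decides internally which expert answers each prompt. This is an illustrative sketch only, not SambaNova's implementation; the class name, the stand-in experts, and the keyword router are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical types: an expert maps a prompt to a response string,
# and a router maps a prompt to the name of the expert that should answer it.
Expert = Callable[[str], str]
Router = Callable[[str], str]


class CompositionOfExperts:
    """Toy CoE wrapper: one public generate() method, with routing hidden inside."""

    def __init__(self, experts: Dict[str, Expert], router: Router) -> None:
        if not experts:
            raise ValueError("at least one expert is required")
        self.experts = experts
        self.router = router

    def generate(self, prompt: str) -> str:
        # Ask the router which expert should handle this prompt.
        name = self.router(prompt)
        if name not in self.experts:
            raise KeyError(f"router selected unknown expert: {name!r}")
        # Forward the prompt to the chosen expert and return its answer.
        return self.experts[name](prompt)


if __name__ == "__main__":
    # Stand-in experts and a trivial keyword router, purely for illustration.
    experts = {
        "math": lambda p: f"[math expert] {p}",
        "general": lambda p: f"[general expert] {p}",
    }

    def keyword_router(prompt: str) -> str:
        return "math" if any(ch.isdigit() for ch in prompt) else "general"

    coe = CompositionOfExperts(experts, keyword_router)
    print(coe.generate("What is 2 + 2?"))            # [math expert] What is 2 + 2?
    print(coe.generate("Summarize this paragraph."))  # [general expert] Summarize this paragraph.
```

A real router would use a learned classifier rather than a keyword rule; the point of the design is that swapping the router does not change the caller-facing interface.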

Tasks

The Samba-1-Chat-Router is intended primarily for chat, covering a diverse range of subjects, including high-school and college-level sciences, the humanities, and professional disciplines such as medicine, law, and engineering. It can also be used for general language tasks such as common-sense reasoning, summarization, translation, and writing.

Router attributes

The table below describes the experts and components available in the Samba-1-Chat-Router.

| Version | Experts | Component | Mode |
|---|---|---|---|
| Samba-1-Chat-Router-V1 | | KNN-Classifier-Samba-1-Chat-Router | Inference |
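The router's component is listed as a KNN classifier. The Python sketch below shows how a k-nearest-neighbors classifier can, in general, route a prompt: compare the prompt's embedding to labeled example embeddings and pick the expert that wins a majority vote among the k closest. It assumes prompts have already been embedded as numeric vectors; the function name, the 2-D toy vectors, and the expert labels are hypothetical, and this is not the actual KNN-Classifier-Samba-1-Chat-Router.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# Each labeled example pairs a prompt embedding with the expert that should answer it.
Example = Tuple[Sequence[float], str]


def knn_route(query: Sequence[float], examples: List[Example], k: int = 3) -> str:
    """Return the expert label most common among the k nearest examples."""
    if not examples:
        raise ValueError("need at least one labeled example")
    if k < 1:
        raise ValueError("k must be at least 1")
    # Sort examples by Euclidean distance to the query embedding and keep the k closest.
    nearest = sorted(examples, key=lambda ex: math.dist(query, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    # most_common() orders equal counts by first occurrence, so ties go to
    # the label of the closer example.
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Toy 2-D "embeddings" standing in for real prompt embeddings (hypothetical data).
    examples = [
        ([0.0, 0.1], "medicine"),
        ([0.1, 0.0], "medicine"),
        ([1.0, 0.9], "law"),
        ([0.9, 1.0], "law"),
        ([1.1, 1.0], "law"),
    ]
    print(knn_route([0.05, 0.05], examples, k=3))  # medicine
    print(knn_route([1.0, 1.0], examples, k=3))    # law
```

KNN needs no training step beyond storing labeled examples, which is one reason it suits a lightweight router; the trade-off is that every query is compared against the stored examples.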

Inference inputs

Inference inputs for the Samba-1-Chat-Router are described below. See the Online generative inference section of the API document for request format details.

- Number of inputs: 1
- Input: Prompt or query
- Input type: Text
- Input format: String
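The router takes a single text prompt, and the example input is that string wrapped in a one-element list. The minimal Python sketch below builds and serializes that list. The helper name `build_inputs` is hypothetical, and the surrounding request envelope is defined in the Online generative inference section of the API document, not here.

```python
import json
from typing import List


def build_inputs(prompt: str) -> List[str]:
    """Wrap a single prompt string in the one-element list used as the input."""
    if not isinstance(prompt, str) or not prompt.strip():
        raise ValueError("prompt must be a non-empty string")
    return [prompt]


if __name__ == "__main__":
    # Serialize the input list to JSON text.
    print(json.dumps(build_inputs("What are some common stocks?")))
    # ["What are some common stocks?"]
```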

Example input:

["What are some common stocks?"]

Inference outputs

Inference outputs for the Samba-1-Chat-Router are described below. See the Online generative inference section of the API document for request and response format details.

- Number of outputs: 1
- Output: Model response
- Output type: Text
- Output format: List of tokens
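Because the output is a list of tokens, a client typically concatenates them to recover the response text. The minimal Python sketch below assumes each token already carries its own leading whitespace, as is common for subword tokenizers; this document does not confirm that, and the helper name `detokenize` and the sample tokens are hypothetical.

```python
from typing import List


def detokenize(tokens: List[str], strip: bool = True) -> str:
    """Concatenate tokens into response text.

    No separator is inserted, on the assumption that tokens include their
    own spacing. With strip=True, leading and trailing whitespace (such as
    initial newline tokens) is removed.
    """
    text = "".join(tokens)
    return text.strip() if strip else text


if __name__ == "__main__":
    # Hypothetical token list with explicit leading spaces.
    tokens = ["\n", "\n", "Answer", ":", " The", " capital", " of", " Austria", " is", " Vienna", "."]
    print(detokenize(tokens))  # Answer: The capital of Austria is Vienna.
```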

Example output:

["\n", "\n", "Answer", ":", "The", "capital", "of", "Austria", "is", "Vienna", "(", "G", "erman", ":", "Wien", ")."]

Inference hyperparameters and settings

The table below describes the inference hyperparameters and settings for the Samba-1-Chat-Router. See the Online generative inference section of the API document for basic parameter details.

| Attribute | Type | Description | Allowed values |
|---|---|---|---|
| | Boolean | Allows the user to specify the complete prompt construction. If this value is unset, the prompt construction elements, such as the start and stop tags, the bot and assistant tags, and the default system prompt, are constructed automatically. | true, false |
| | Boolean | If set to true, the endpoint does not run completion and simply returns the number. | true, false |
| | String | Manually selects which expert model to invoke. | |
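The first Boolean setting controls whether the caller supplies the complete prompt or lets the prompt construction elements be added automatically. The Python sketch below illustrates that behavior in general terms. The flag name `user_built_prompt`, the tag strings, their ordering, and the default system prompt are all hypothetical placeholders: this document does not give the attribute's name or the actual tags the router uses.

```python
from typing import Optional

# Placeholder values (hypothetical): the real tags and default system prompt
# are not specified in this document.
START_TAG = "<s>"
STOP_TAG = "</s>"
USER_TAG = "[USER]"
ASSISTANT_TAG = "[ASSISTANT]"
DEFAULT_SYSTEM_PROMPT = "You are a helpful assistant."


def construct_prompt(text: str, user_built_prompt: bool = False,
                     system_prompt: Optional[str] = None) -> str:
    """Return the prompt that would be sent to the model.

    If user_built_prompt is True, the caller has already written the complete
    prompt, so it is passed through unchanged. Otherwise the start and stop
    tags, role tags, and a system prompt are added automatically.
    """
    if user_built_prompt:
        return text
    system = DEFAULT_SYSTEM_PROMPT if system_prompt is None else system_prompt
    return f"{START_TAG}{system}\n{USER_TAG} {text}{STOP_TAG}\n{ASSISTANT_TAG}"


if __name__ == "__main__":
    print(construct_prompt("What are some common stocks?"))
    print(construct_prompt("<my fully formatted prompt>", user_built_prompt=True))
```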

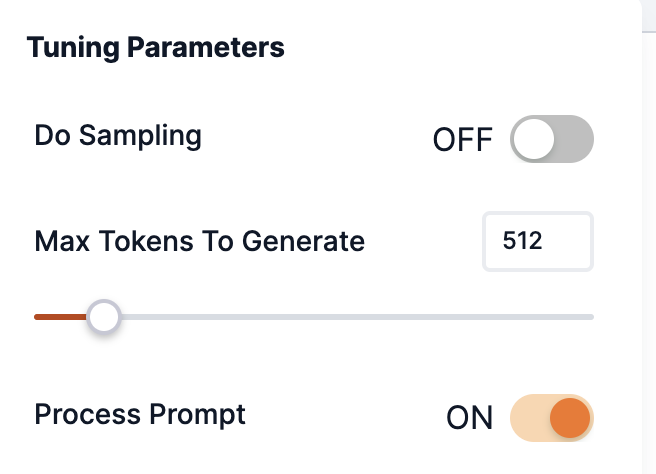

Samba-1-Chat-Router in the Playground

The Playground provides an in-platform experience for generating predictions using the Samba-1-Chat-Router. See Select a CoE expert or router for how to choose the Samba-1-Chat-Router for use with your prompt in the Playground.

When selecting an endpoint deployed from Samba-1-Chat-Router-V1, please ensure that Process prompt is turned On in the Tuning Parameters.