Hypertuner (Beta)

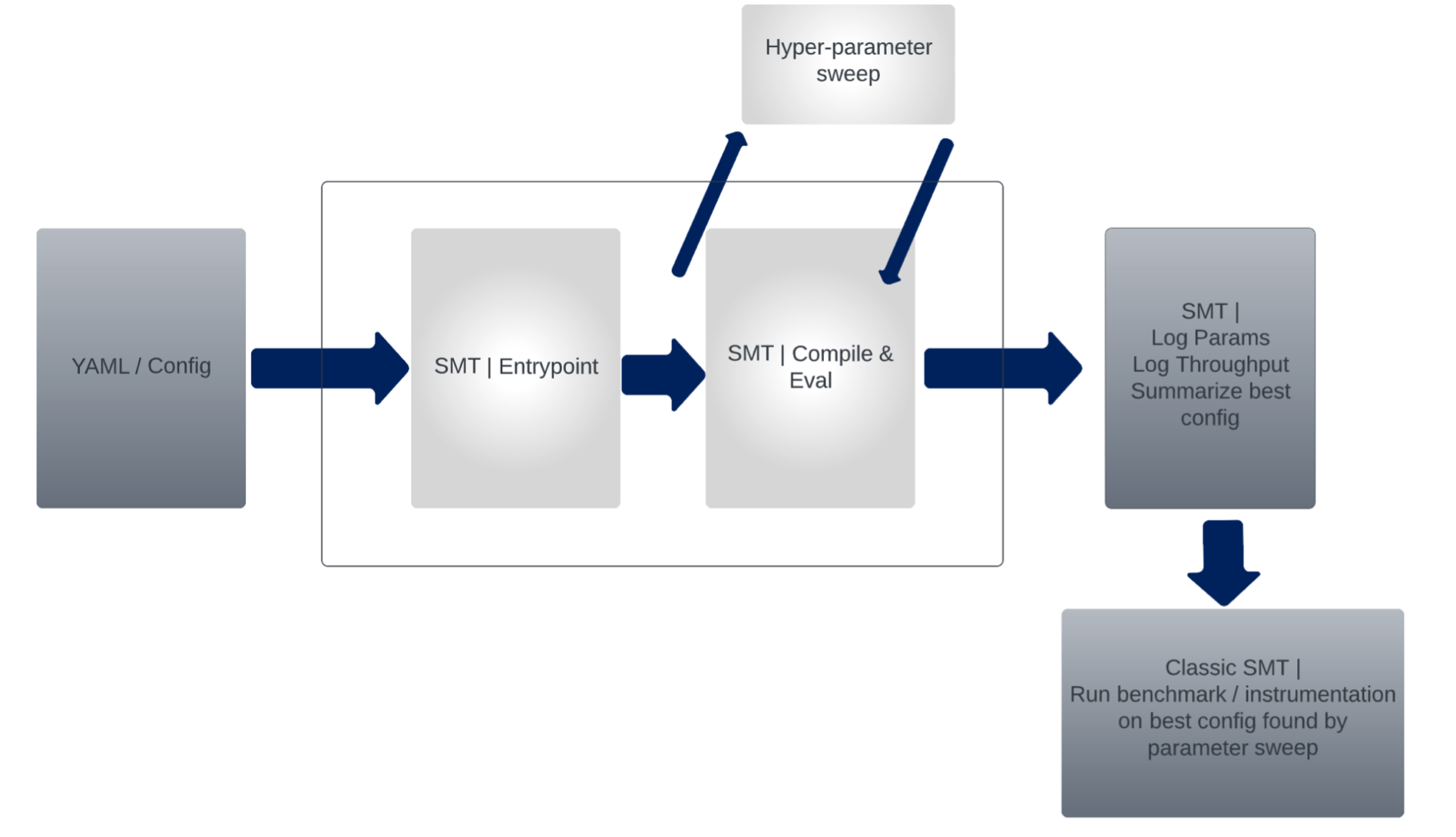

Hypertuner, which is part of SambaTune, enables fine-tuning the model configuration.

- When you run SambaTune, it runs the model with the model arguments.

- Hypertuner takes this one step further. It runs the model with a combination of the model arguments and sweep arguments. For the sweep arguments, Hypertuner combines the specified options to run the model with multiple combinations of arguments.

For example, if you specify the following sweep parameters, Hypertuner runs the model with all possible combinations of batch-size and num-spatial-batches.

```
samba-sweep-parameters:
  batch-size: [1, 4, 10]
  num-spatial-batches: [1, 2, 3]
```

Hypertuner can help you improve model performance by letting you compare how the different combinations of batch size and number of spatial batches perform.
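To make "all possible combinations" concrete, the following Python sketch enumerates the combinations for the sweep parameters in the example. It only illustrates the idea of a full cross product. The helper name `all_combinations` is hypothetical, and the sketch does not show how Hypertuner itself builds or orders its runs.

```python
from itertools import product

# Sweep parameters copied from the example in this section.
sweep_parameters = {
    "batch-size": [1, 4, 10],
    "num-spatial-batches": [1, 2, 3],
}


def all_combinations(params):
    """Return every combination of sweep values as a list of dicts.

    Hypothetical helper for illustration only; not part of SambaTune.
    """
    names = list(params)
    return [dict(zip(names, values)) for values in product(*params.values())]


combinations = all_combinations(sweep_parameters)
for combo in combinations:
    print(combo)
print(len(combinations))  # 3 values x 3 values = 9 combinations
```

Each additional sweep parameter multiplies the number of combinations, so the count grows quickly as you add options.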

Caveats

- Use Hypertuner if you’re familiar with the model and the arguments it uses. If you’re not familiar with the model, you should perform a sweep with a valid range of arguments for your model.

- Hypertuner provides initial values for best performance, but you will need to do additional work to finish optimizing your model.

- The `parameter-sweep-batch` argument is not currently implemented.

- The Best Compiler Config tab in the Parameter Sweep section of the SambaTune GUI is expected to display no data in this Beta version. Your sweep run is successful even if this tab shows no data.

Hypertuner YAML configuration file

The YAML configuration file supports the following options for running SambaTune in parameter-sweep mode.

| Option | Description | Default |
|---|---|---|
| parameter-sweep-iterations | Number of configuration value combinations that a user wants to sweep on. For example, if you set | 100 |
| parameter-sweep-batch | Number of search configurations to run in one batch. IMPORTANT: This argument is not currently supported. | Not yet supported |
| parameter-sweep-method | Method that defines how to make the combinations. The current available option for this argument is | random |
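As a rough illustration of how an iteration limit and a random method could interact, the Python sketch below picks at most a given number of distinct combinations at random from the full set. This is only one plausible reading of the options above. Sampling without replacement, the fixed seed, and the function name `sample_combinations` are all assumptions, not a description of Hypertuner's actual implementation.

```python
import random
from itertools import product


def sample_combinations(params, iterations, seed=0):
    """Pick up to `iterations` distinct combinations at random.

    Assumed behavior for illustration; Hypertuner may sample differently.
    """
    names = list(params)
    grid = [dict(zip(names, values)) for values in product(*params.values())]
    rng = random.Random(seed)  # fixed seed only to make the sketch repeatable
    return rng.sample(grid, min(iterations, len(grid)))


sweep_parameters = {"batch-size": [1, 4, 10], "num-spatial-batches": [1, 2, 3]}

chosen = sample_combinations(sweep_parameters, iterations=6)
print(len(chosen))  # 6 of the 9 possible combinations

# With the default of 100 iterations, the limit exceeds the 9 available
# combinations, so this sketch returns all of them.
print(len(sample_combinations(sweep_parameters, iterations=100)))
```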

You can combine the parameters into a model sweep configuration file. Here’s an example of a linear-samba-sweep.yaml file.

```
app: /opt/sambaflow/apps/micros/linear_net.py
compile-args: --plot
model-args: -b 512 -mb 64 --in-features 2560 --out-features 256
parameter-sweep-iterations: 6
samba-sweep-parameters:
  batch-size: [1, 4, 10]
  num-spatial-batches: [1, 2, 3]
```

Run Hypertuner

To run Hypertuner, pass in the parameter-sweep option.

For example:

```
$ sambatune -m "parameter-sweep" -- linear-samba-sweep.yaml
```

The extra space after -- is required.
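The Python sketch below shows why the space is likely to matter. It uses the standard-library `shlex.split`, which splits a command line much as a POSIX shell does, to compare the tokens with and without the space. How `sambatune` itself interprets the `--` token is an assumption here (it is conventionally an end-of-options marker), so treat this as an illustration of shell tokenization only.

```python
import shlex

# With the space, "--" is its own token and the file name follows it.
with_space = shlex.split('sambatune -m "parameter-sweep" -- linear-samba-sweep.yaml')

# Without the space, "--" fuses with the file name into one token that
# looks like a long option rather than a file path.
without_space = shlex.split('sambatune -m "parameter-sweep" --linear-samba-sweep.yaml')

print(with_space)
# ['sambatune', '-m', 'parameter-sweep', '--', 'linear-samba-sweep.yaml']
print(without_space)
# ['sambatune', '-m', 'parameter-sweep', '--linear-samba-sweep.yaml']
```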

Note: Parameter sweeping can cause some unstable configurations to be searched. Hypertuner discards those configurations.

Model and Sweep arguments

A model can be sweep-optimized only over the arguments that the model exposes. Hypertuner can usually guess the arguments to sweep over, but doesn’t know these arguments ahead of time.

The following arguments are supported with Hypertuner:

| Arguments for running the model | Default value | Type |
|---|---|---|
| batch-size | 1 | UINT16 |
| data-parallel | FALSE | BOOL |
| tensor-parallel | None | (None, batch, weight) |
| mapping | section | (section, Spatial) |
| microbatch-size | 1 | UINT16 > 0 |
| num-spatial-batches | 1 | UINT16 > 0 |
| fp32-params | FALSE | BOOL |
| dropout-tiling-legalizer-error | FALSE | BOOL |
| max-tiling-depth | None | UINT16 |
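Before launching a sweep, it can help to check the candidate values against the types in the table. The Python sketch below does this for the integer arguments only. It assumes that UINT16 means an unsigned 16-bit integer (0 to 65535) and that "UINT16 > 0" excludes zero. The function `invalid_sweep_values` is a hypothetical pre-check, not something SambaTune provides.

```python
UINT16_MAX = 65535  # assumed upper bound for an unsigned 16-bit integer

# (minimum, maximum) bounds derived from the Type column of the table.
INTEGER_ARGUMENTS = {
    "batch-size": (0, UINT16_MAX),
    "microbatch-size": (1, UINT16_MAX),
    "num-spatial-batches": (1, UINT16_MAX),
    "max-tiling-depth": (0, UINT16_MAX),
}


def invalid_sweep_values(sweep_parameters):
    """Return {argument: [bad values]} for integer arguments out of range."""
    problems = {}
    for name, values in sweep_parameters.items():
        if name not in INTEGER_ARGUMENTS:
            continue  # non-integer arguments are not checked in this sketch
        low, high = INTEGER_ARGUMENTS[name]
        bad = [
            v for v in values
            if isinstance(v, bool) or not isinstance(v, int) or not low <= v <= high
        ]
        if bad:
            problems[name] = bad
    return problems


candidate = {"batch-size": [1, 4, 10], "num-spatial-batches": [0, 2, 70000]}
print(invalid_sweep_values(candidate))  # {'num-spatial-batches': [0, 70000]}
```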

Examine Hypertuner reports from the GUI

Note: In this Beta release, some of the Parameter Sweep tabs don’t have useful information yet.

To view reports with Hypertuner information:

- Install SambaTune and the SambaTune GUI. See Install SambaTune.

- Run your model with SambaTune. See Run SambaTune.

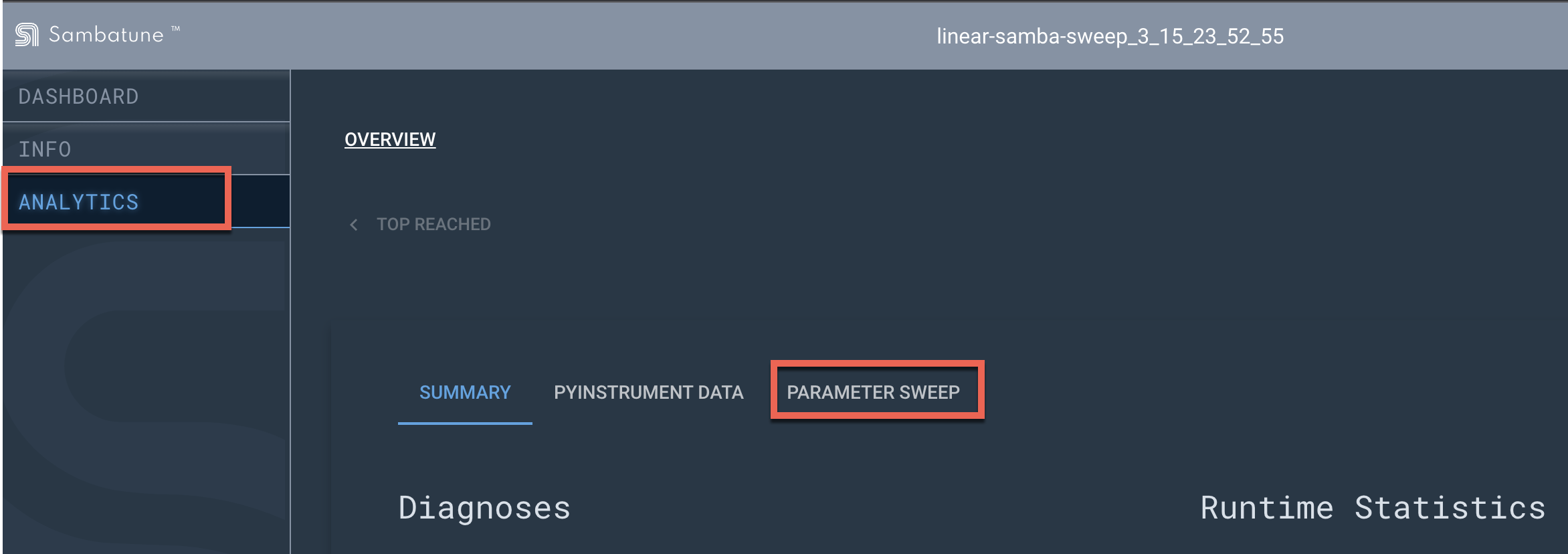

To examine the parameter sweep results:

| No. | Description | Screenshot |

|---|---|---|

1 |

Start the GUI and select the model you ran with Hypertuner |

|

2 |

Click Analytics on the left, and then select the Parameter-Sweep tab. |

|

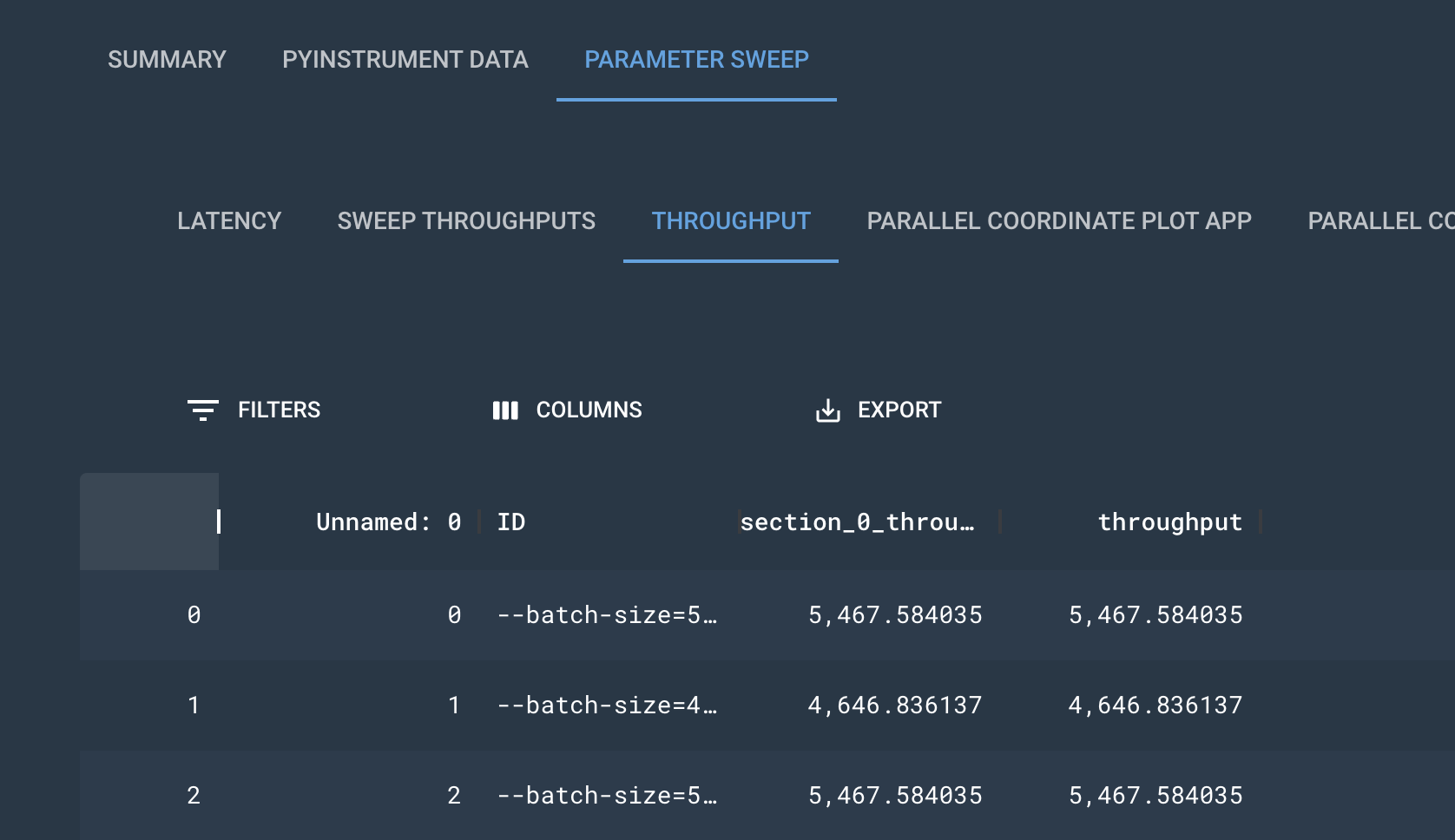

3 |

Click the different tabs to explore the parameter sweep runs. For example, click Throughput to see the different parameter compinations in different rows, and explore which had the best throughput. |

|

4 |

Click Throughput vs. Config to examine which selections had the highest values. |

|