Batch inference

Batch inference is the process of generating predictions on a batch of observations. Within the platform, you can generate predictions on bulk data by creating a batch inference job.

This document describes how to:

Create a batch inference job using the GUI

Create a new batch inference using the GUI job by following the steps below.

-

Create a new project or use an existing one.

-

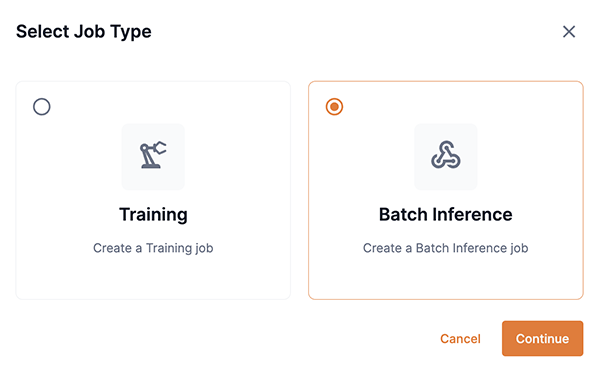

From a project window, click New job. The Select a job type window (Figure 1) will appear.

-

Select Batch inference under Select a job type, as shown in Figure 1 and click Continue. The Create a batch inference window will open.

Figure 1. Select job type

Figure 1. Select job type

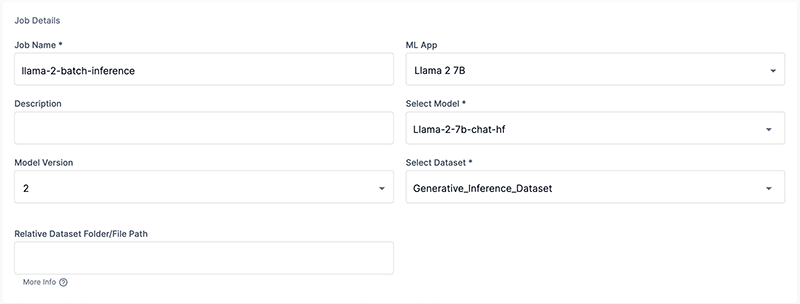

Job details pane

The Job details pane in the Create a batch inference window is the top pane that allows you to setup your batch inference job.

-

Enter a name for the job into the Job Name field, as shown in Figure 2.

-

Select the ML App from the ML App drop-down, as shown in Figure 2.

The ML App selected will refine the models displayed, by corresponding model type, in the Select model drop-down.

-

From the Select model drop-down in the Job details pane (Figure 2), choose My models, Shared models, SambaNova models, or Select from Model Hub.

The available models displayed are defined by the previously selected ML App drop-down. If you wish to view models that are not related to the selected ML App, select Clear from the ML App drop-down. Selecting a model with the ML App drop-down cleared, will auto populate the ML App field with the correct and corresponding ML App for the model.

-

My models displays a list of models that you have previously added to the Model Hub.

-

Shared models displays a list of models that have been assigned a share role.

-

SambaNova models displays a list of models provided by SambaNova.

-

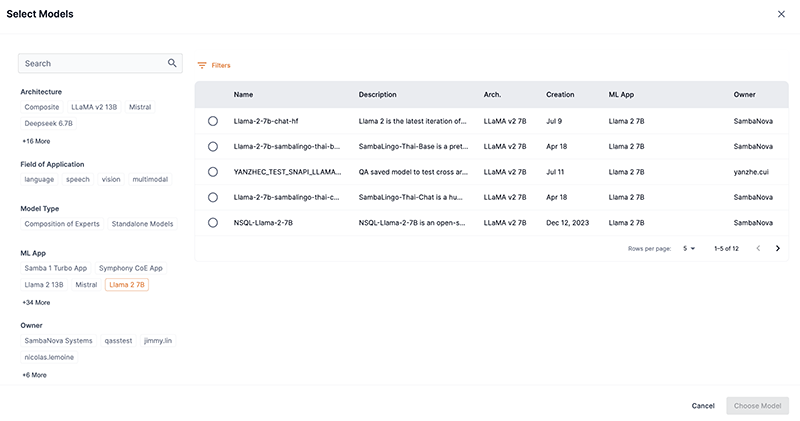

Select from Model Hub displays a window with a list of downloaded models that correspond to a selected ML App, as shown in Figure 3, or a list of all the downloaded models if an ML App is not selected. The list can be filtered by selecting options under Field of application, ML APP, Architecture, and Owner. Additionally, you can enter a term or value into the Search field to refine the model list by that input. Select the model you wish to use and confirm your choice by clicking Choose model.

Figure 3. Select from Model Hub

Figure 3. Select from Model Hub

-

-

In the Job details pane, select the version of the model to use from the Model version drop-down, as shown in Figure 2.

-

From the Select dataset drop-down in the Job details pane (Figure 2), choose My datasets, SambaNova datasets, or Select from datasets.

-

My datasets displays a list of datasets that you have added to the platform and can be used for a selected ML App.

-

SambaNova datasets displays a list of downloaded SambaStudio provided datasets that correspond to a selected ML App.

-

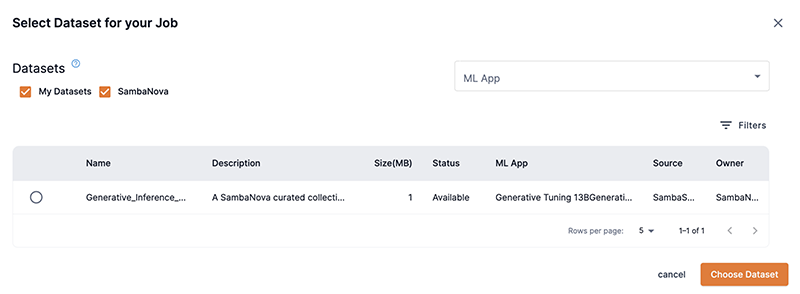

Select from datasets displays a window with a detailed list of downloaded datasets that can be used for a selected ML App, as shown in Figure 4. The My datasets and SambaNova checkboxes filter the dataset list by their respective group. The ML App drop-down filters the dataset list by the corresponding ML App. Select the dataset you wish to use and confirm your choice by clicking Choose dataset.

Figure 4. Select from datasets

Figure 4. Select from datasets

-

-

In the Relative dataset folder/file path field (Figure 2), specify the relative path from storage to the folder that contains the data for batch inference.

RDU requirements pane

The RDU requirements pane will display after selecting a model. This section allows you to configure how the available RDUs are utilized.

|

Contact your administrator for more information on RDU configurations specific to your SambaStudio platform. |

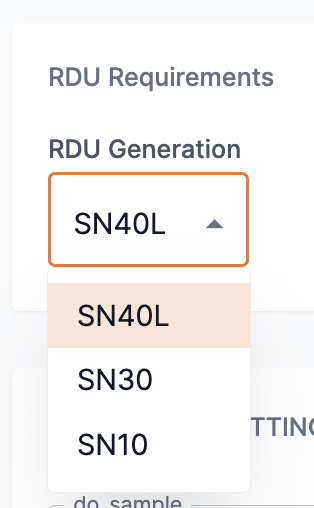

- RDU generation drop-down

-

The RDU generation drop-down allows users to select an available RDU generation version to use for a batch inference job. If more than one option is available, the SambaStudio platform will default to the recommended RDU generation version to use based on your platform’s configuration and the selected model. You can select a different RDU generation version to use, if available, than the recommended option from the drop-down.

Figure 5. RDU requirements

Figure 5. RDU requirements

Inference settings pane

Set the inference settings to to optimize your batch inference job for the input data or use the default values.

-

Expand the Inference settings pane by clicking the double arrows to set and adjust the settings.

-

Hover over a parameter to view its brief description.

Run the batch inference job

Click Run job at the bottom of the Create batch inference window to submit your batch inference job.

|

Batch inference jobs are long running jobs. The time they take to run to completion is heavily dependent on the task and the number of input samples. |

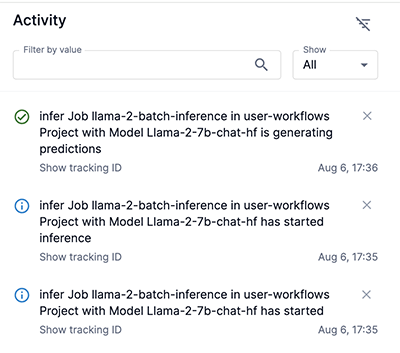

Activity notifications

The Activity panel displays notifications relevant to a specific job and can be accessed from the job’s Batch inference window.

-

Navigate to the Batch inference details window from the Dashboard or from its associated Project window. Click Activity at the top of the Batch inference details window to open the Activity panel.

Figure 8. Activity at top of Batch inference job details

Figure 8. Activity at top of Batch inference job details -

The Activity panel displays notifications specific to the corresponding batch inference job.

-

Similar to the platform Notifications panel, notifications are displayed in a scrollable list and include a detailed heading, a tracking ID, and a creation timestamp.

-

Click Show tracking ID to view the tracking ID for the corresponding notification.

-

Click the copy icon to copy the tracking ID to your clipboard.

Figure 9. Batch inference job Activity panel

Figure 9. Batch inference job Activity panel

-

Create a batch inference job using the CLI

The example below demonstrates how to create a batch inference job using the snapi job create command. You will need to specify the following:

-

A project to assign the job. Create a new project or use an existing one.

-

A name for your new job.

-

Use batch_predict for the --type input. This designates the job to be a batch inference job.

-

A model to use for the model-checkpoint input.

-

A dataset to use for the dataset input.

-

The RDU architecture generation version to use of your SambaStudio platform configuration for the --arch input.

-

Run the snapi tenant info command to view the available RDU generation version(s) specific to your SambaStudio platform. Contact your administrator for more information on RDU configurations specific to your SambaStudio platform.

-

Run the snapi model info command to obtain the --arch input compatible for the selected model.

The dataset must be compatible with the model you choose.

-

$ snapi job create \

--project <project-name> \

--job <your-new-job-name> \

--type batch_predict \

--model-checkpoint <model-name> \

--dataset <dataset-name>

--arch SN10Example snapi model info command

The example snapi model info command snippet below demonstrates where to find the compatible --arch input for the GPT_13B_Base_Model when used in a batch inference job. The required value is located on the last line of the example snippet and is represented as 'batch_predict': { 'sn10'. Note that this example snippet contains only a portion of the actual snapi model info command response. You will need to specify:

-

The model name or ID for the --model input.

-

Use batch_predict for the --job-type input. This returns the 'batch_predict': { 'sn10' value, which would be entered as --arch SN10 into the snapi job create command.

Click to view the example snapi model info command snippet.

$ snapi model info \

--model GPT_13B_Base_Model \

--job-type batch_predict

Model Info

============

ID : 61b4ff7d-fbaf-444d-9cba-7ac89187e375

Name : GPT_13B_Base_Model

Architecture : GPT 13B

Field of Application : language

Validation Loss : -

Validation Accuracy : -

App : 57f6a3c8-1f04-488a-bb39-3cfc5b4a5d7a

Dataset : {'info': 'N/A\n', 'url': ''}

SambaNova Provided : True

Version : 1

Description : This is a randomly initialized model, meant to be used to kick off a pre-training job.

Generally speaking, the process of pre-training is expensive both in terms of compute and data. For most use cases, it will be better to fine tune one of the provided checkpoints, rather than starting from scratch.

Created Time : 2023-03-23 00:00:00 +0000 UTC

Status : Available

Steps : 0

Hyperparameters :

{ 'batch_predict': { 'sn10': { 'imageVariants': [],Quit or delete a batch inference job

Follow the steps below to quit or delete a batch inference job.

Option 1: From the details window

-

Navigate to the Batch Inference details window from Dashboard or Projects.

-

Click the Quit button to stop the job from running. The confirmation box will open.

-

Click the Delete button to quit and remove the job from the platform. All job related data and export history will permanently be removed from the platform. The confirmation box will open.

-

-

In the confirmation box, click the Yes button to confirm that you want to quit or delete the job.

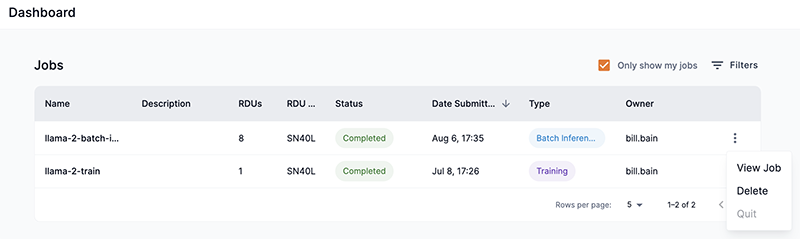

Option 2: From the job list

-

Navigate to the Jobs table from the Dashboard. Alternatively, the Jobs table can be accessed from the job’s associated project window.

-

Click the kebob menu (three dots) in the last column to display the drop-down menu and available actions for the selected job.

-

Click the Delete button to quit and remove the job from the platform. All job related data and export history will permanently be removed from the platform. The confirmation box will open.

-

Click the Quit button to stop the job from running. The confirmation box will open.

-

-

In the confirmation box, click the Yes button to confirm that you want to quit or delete the job.

Access results

After your batch inference job is complete, you can access the results as described below. Results of a completed batch inference job are enclosed in a single file that contains predictions for all input samples.

Download results to your local machine

Follow the steps below to download the results of your batch inference job to your local machine.

|

Downloads are limited to a maximum file size of 2GB. Downloading results larger than 2GB will fail to complete. Use the access from NFS method if the file size exceeds 2GB. |

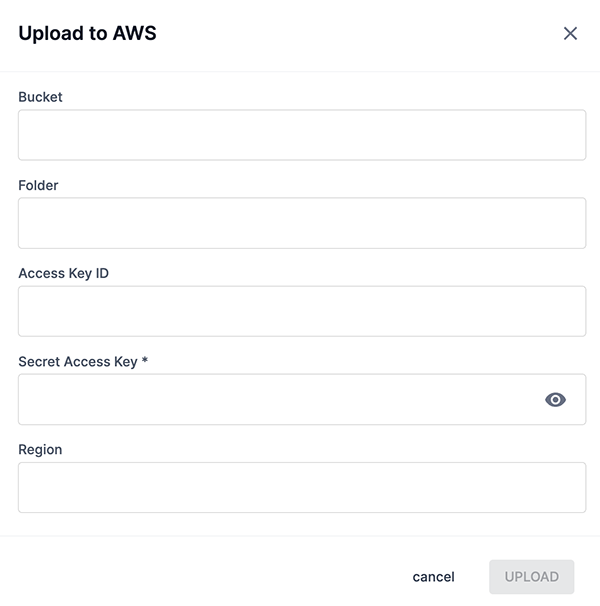

Upload to AWS S3

Follow the steps below to upload results from a batch inference job to a folder in an AWS S3 bucket.

-

Navigate to the Batch Inference detail window from Dashboard or Projects.

-

Click the Upload results to AWS button. The Upload to AWS box will open. Provide the following information:

-

In the Bucket field, input the name of your S3 Bucket.

-

Input the relative path to the dataset in the S3 bucket into the Folder field. This folder should include the required dataset files for the task (for example, the labels, training, and validation files).

-

In the Access key ID field, input the unique ID provided by AWS IAM to manage access.

-

Enter your Secret access key into the field. This allows authentication access for the provided Access Key ID.

-

Enter the AWS Region that your S3 bucket resides into the Region field.

-

|

There is no limit to the number of times results can be uploaded to AWS S3 buckets, but only one upload is allowed at a time. |

Access from NFS

You can access the results from a batch inference job directly from the NFS and copy it to a location of your choice. It is recommended to use this method to download results larger than 2GB to your local machine. Follow the steps below to access results from NFS.

Evaluate the job using the GUI

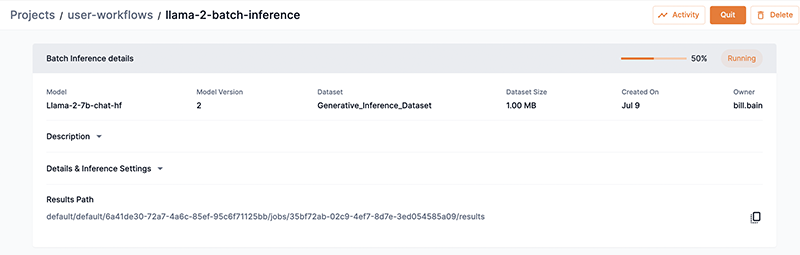

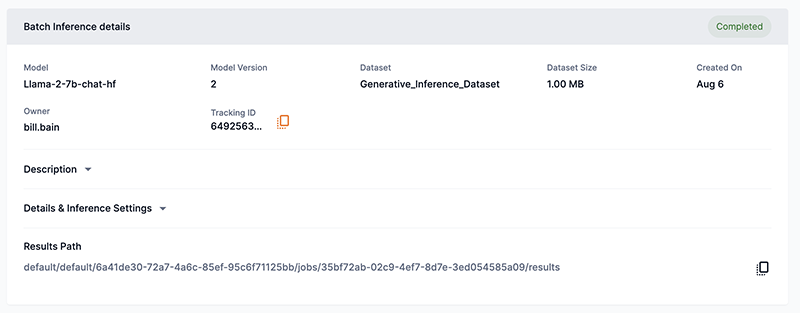

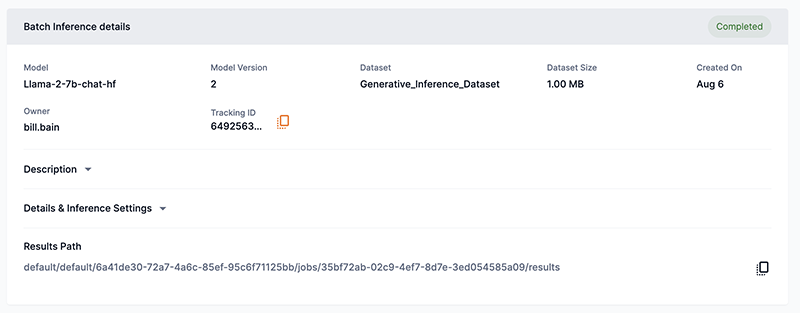

Navigate to the Batch Inference detail window from Dashboard or Projects during the job run (or after its completion) to view job information.

View information

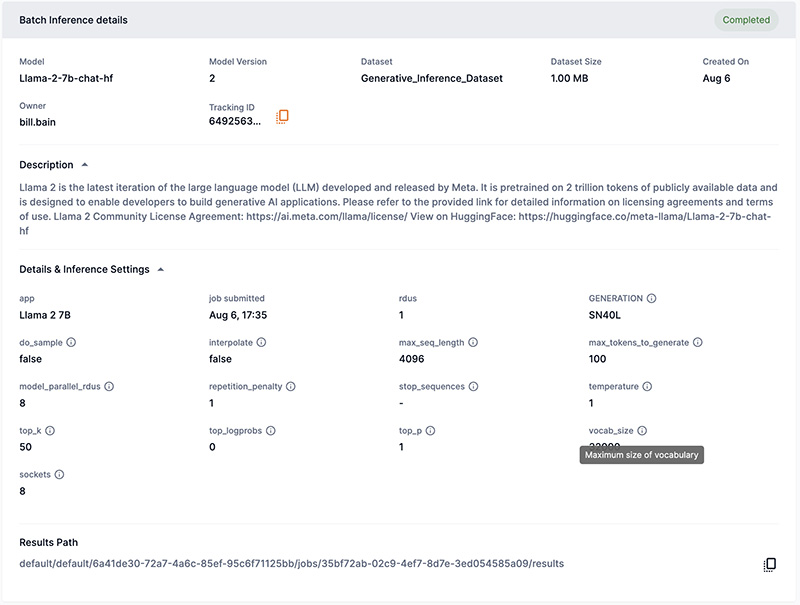

You can view the following information about your batch inference job in the Batch inference details pane.

-

Model displays the model name and architecture used for batch inference.

-

Model version displays the version of the model that was used for the batch inference job

-

Dataset displays the dataset used.

-

Dataset size displays the size of the dataset used.

-

Tracking ID provides a current ID that can be used to identify and report issues to the SambaNova Support team.

-

A Tracking ID is created for each new job.

-

A new Tracking ID will be created if a stopped job is restarted.

-

Only jobs created with, or after, SambaStudio release 24.7.1 will display a Tracking ID.

-

Click the copy icon to copy the Tracking ID number to your clipboard.

-

-

Description displays the a description of the model used.

-

Details & Inference settings displays a snapshot of the batch inference job settings.

-

Results path displays the path to the results file on the mounted NFS.

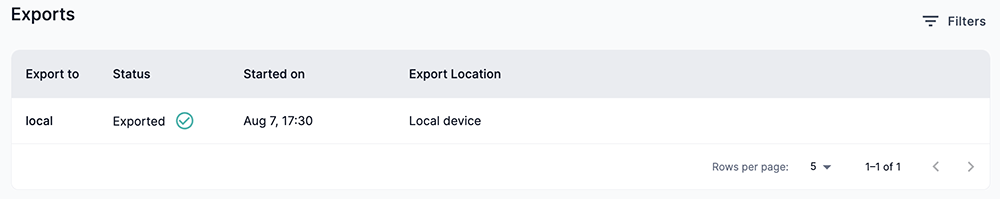

Exports

The exports table displays a list of when and where your batch inference access results were exported.

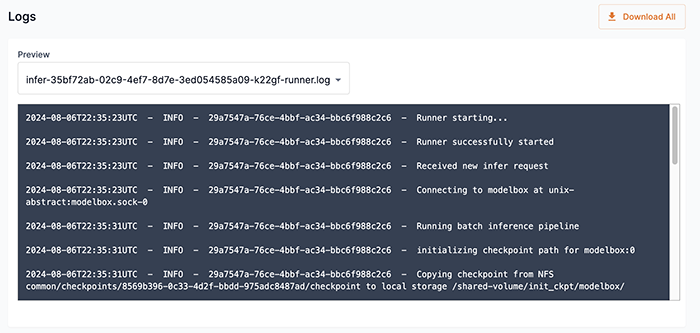

View and download logs using the GUI

The Logs section allows you to preview and download logs of your batch inference job. Logs can help you track progress, identify errors, and determine the cause of potential errors.

|

Logs can be visible in the platform earlier than other data, such as information and job progress. |

-

From the Preview drop-down, select the log file you wish to preview.

-

The Preview window displays the latest 50 lines of the log.

-

To view more than 50 lines of the log, use the Download all feature to download the log file.

-

-

Click Download all to download a compressed file of your logs. The file will be downloaded to the location configured by your browser.

View logs using the CLI

Similar to viewing logs using the GUI, you can use the SambaNova API (snapi) to preview and download logs of your batch inference job.

View the job log file names

The example below demonstrates the snapi job list-logs command. Use this command to view the job log file names of your job. This is similar to using the Preview drop-down menu in the GUI to view and select your job log file names. You will need to specify the following:

-

The project that contains, or is assigned to, the job you wish to view the job log file names.

-

The name of the job you wish to view the job log file names.

$ snapi job list-logs \

--project <project-name> \

--job <job-name>

compile-05a8d29f-aeb2-4ca3-8f1e-7c3b35f8d3a2-5t4v8-runner.log

compile-05a8d29f-aeb2-4ca3-8f1e-7c3b35f8d3a2-5t4v8-model.log

infer-12b3a844-826b-408b-8aae-e05e3149ab32-lgxgq-runner.log

infer-12b3a844-826b-408b-8aae-e05e3149ab32-lgxgq-model.log|

Run |

Preview a log file

After you have viewed the log file names for your job, you can use the snapi job preview-log command to preview the logs corresponding to a selected log file. The example below demonstrates the snapi job preview-log command. You will need to specify the following:

-

The project that contains, or is assigned to, the job you wish to preview the job log file.

-

The name of the job you wish to preview the job log file.

-

The job log file name you wish to preview its logs. This file name is returned by running the

snapi job list-logscommand, which is described above.

$ snapi job preview-log \

--project <project-name> \

--job <job-name> \

--file infer-12b3a844-826b-408b-8aae-e05e3149ab32-lgxgq-runner.log

2023-06-21 14:24:55 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Runner starting...

2023-06-21 14:24:55 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Runner successfully started

2023-06-21 14:24:55 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Received new infer request

2023-06-21 14:24:55 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Connecting to modelbox at localhost:50061

2023-06-21 14:25:05 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Running batch inference pipeline

2023-06-21 14:25:05 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - initializing checkpoint path for modelbox:0

2023-06-21 14:25:29 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - initializing metrics for modelbox:0

2023-06-21 14:25:29 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Staging the dataset

2023-06-21 14:25:33 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Running inference on pipeline

2023-06-21 14:25:33 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Running inference pipeline stage 0

2023-06-21 14:25:33 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Running inference for modelbox

2023-06-21 14:26:50 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Saving outputs from /shared-volume/results/modelbox/ for node

2023-06-21 14:26:50 - INFO - 621b3e76-34d6-4e19-8751-c38e473805f9 - Saving results file /shared-volume/results/modelbox/test.csv to test.csv|

Run |

Download the logs

Use the snapi download-logs command to download a compressed file of your job’s logs. The example below demonstrates the snapi download-logs command. You will need to provide the following:

-

The project that contains, or is assigned to, the job you wish to download the compressed log file.

-

The name of the job you wish to download the compressed log file.

$ snapi job download-logs \

--project <project-name>> \

--job <job-name>

Successfully Downloaded: <job-name> logs|

The default destination for the compressed file download is the current directory. To specify a destination directory, use the |